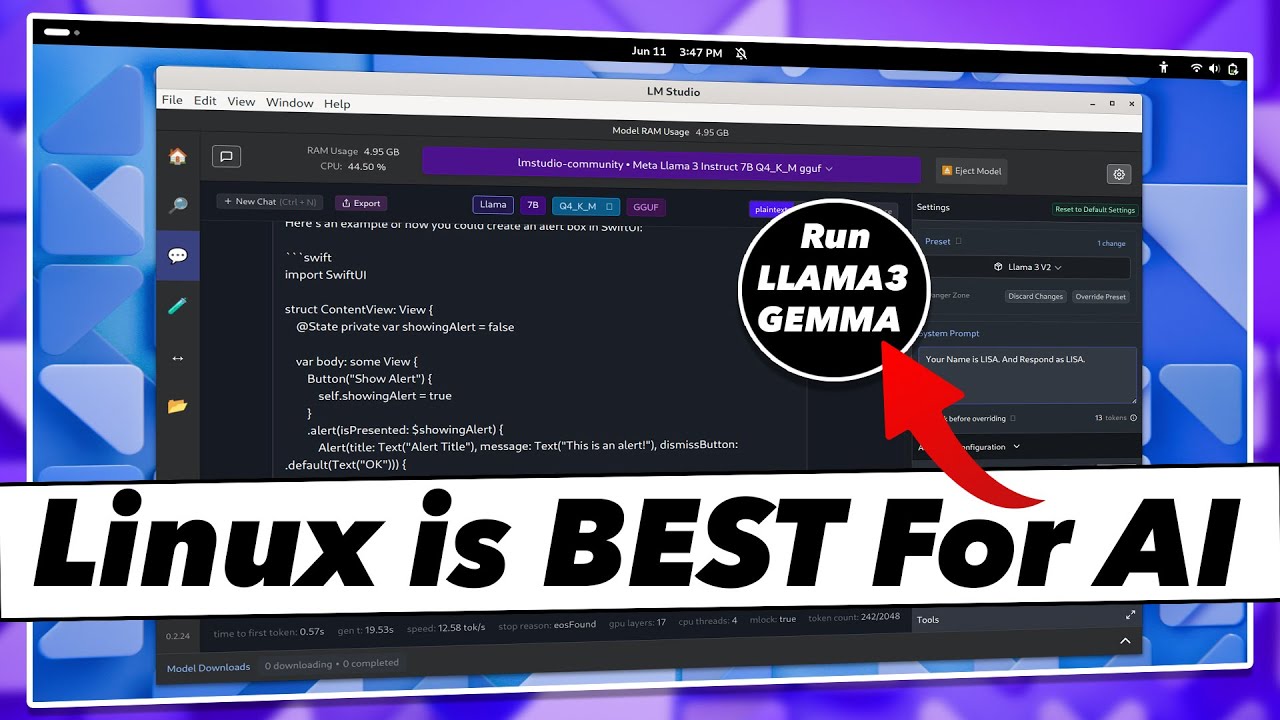

What are your thoughts on #privacy and #itsecurity regarding the #LocalLLMs you use? They seem to be an alternative to ChatGPT, MS Copilot, etc., which are basically creepy privacy black boxes. How can you be sure that local LLMs A) do not “phone home”, B) do not create a profile on you, and C) restrict their analysis to the scope of your terminal? As far as I can see, #ollama and #lmstudio do not provide privacy statements.

But it’s accurate? That doesn’t mean human-looking text can’t be helpful to some, but it’ll also help keep us grounded in the reality of the tech.

It’s not a room of monkeys typing on keyboards. Calculators don’t just spit out random numbers. They use the input to estimate the best solution they can.

I know being anti-AI is popular right now, but y’all are being dishonest about its capabilities to back up your point.

They’re Large Language Models. They’re defined as generative pre-trained text transformers, that’s their entire purpose.

Saying the calculator spits out random numbers would be wrong, but saying a calculator spits out numbers would be correct. “Reductionist” would probably be a better word than “regressive” or “asinine”.